Mobile Testing Strategy

I view testing as a spectrum. At one end of this spectrum all testing is done manually. At the other end all tests are automated. Somewhere between these two extremes lies a good balance. To find that balance, we need to be able to identify parts of an application that can be effectively tested by the right type of test. If a system is designed with a good separation of concerns in mind by creating explicit boundaries around the core business logic, it becomes much easier to identify those parts.

In this blog post we will explore the roles of three types of tests (unit, integration, and end-to-end) in verifying the behavior of a well-architected application.

Architecture #

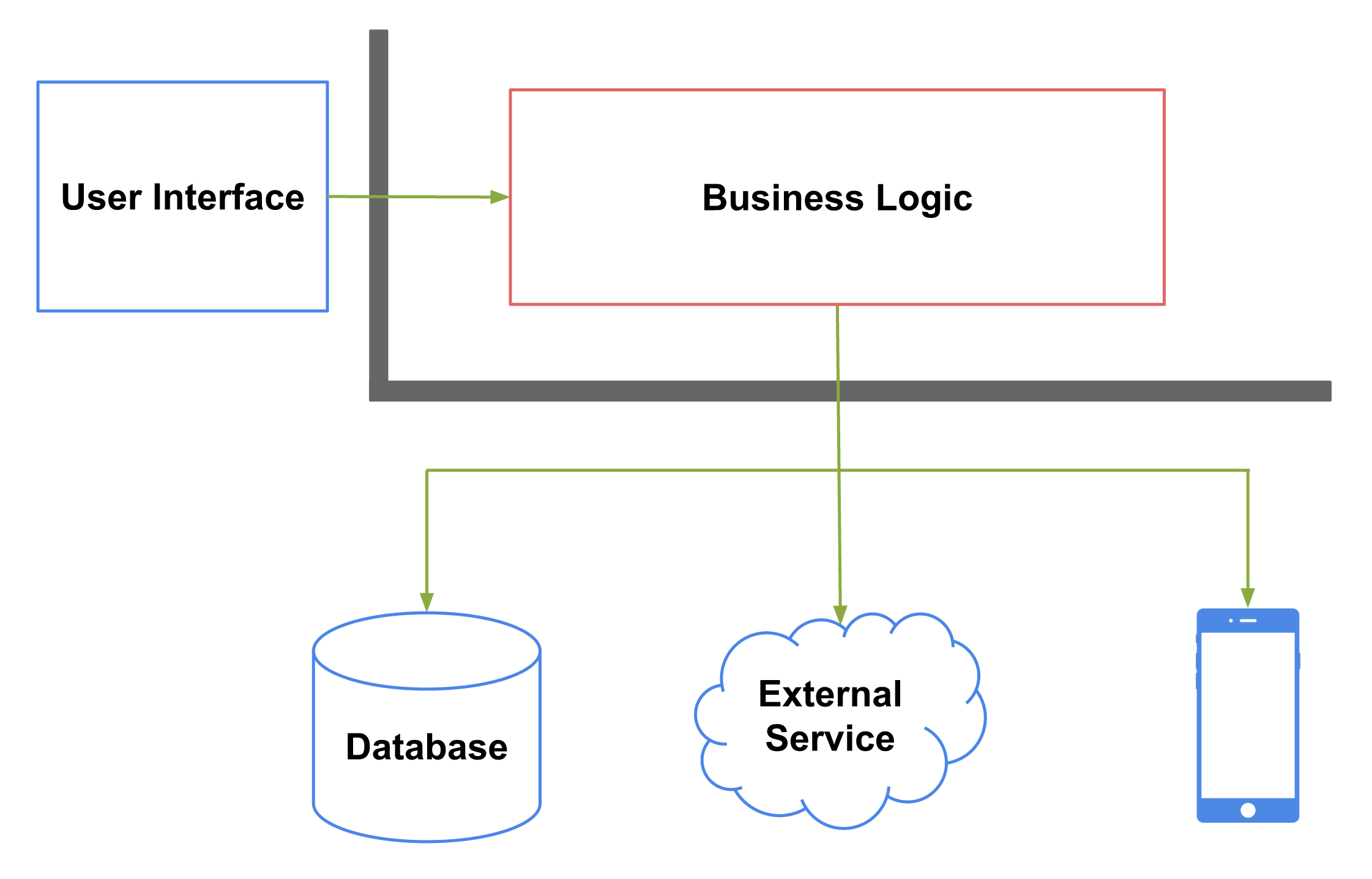

Most applications tend to have the following main parts:

- User Interface

- Business Logic

- Local Database

- Components that interface with remote services

- Components that interface with services provided by the operating system

The figure below shows how these parts can be arranged to achieve a high degree of separation of concerns.

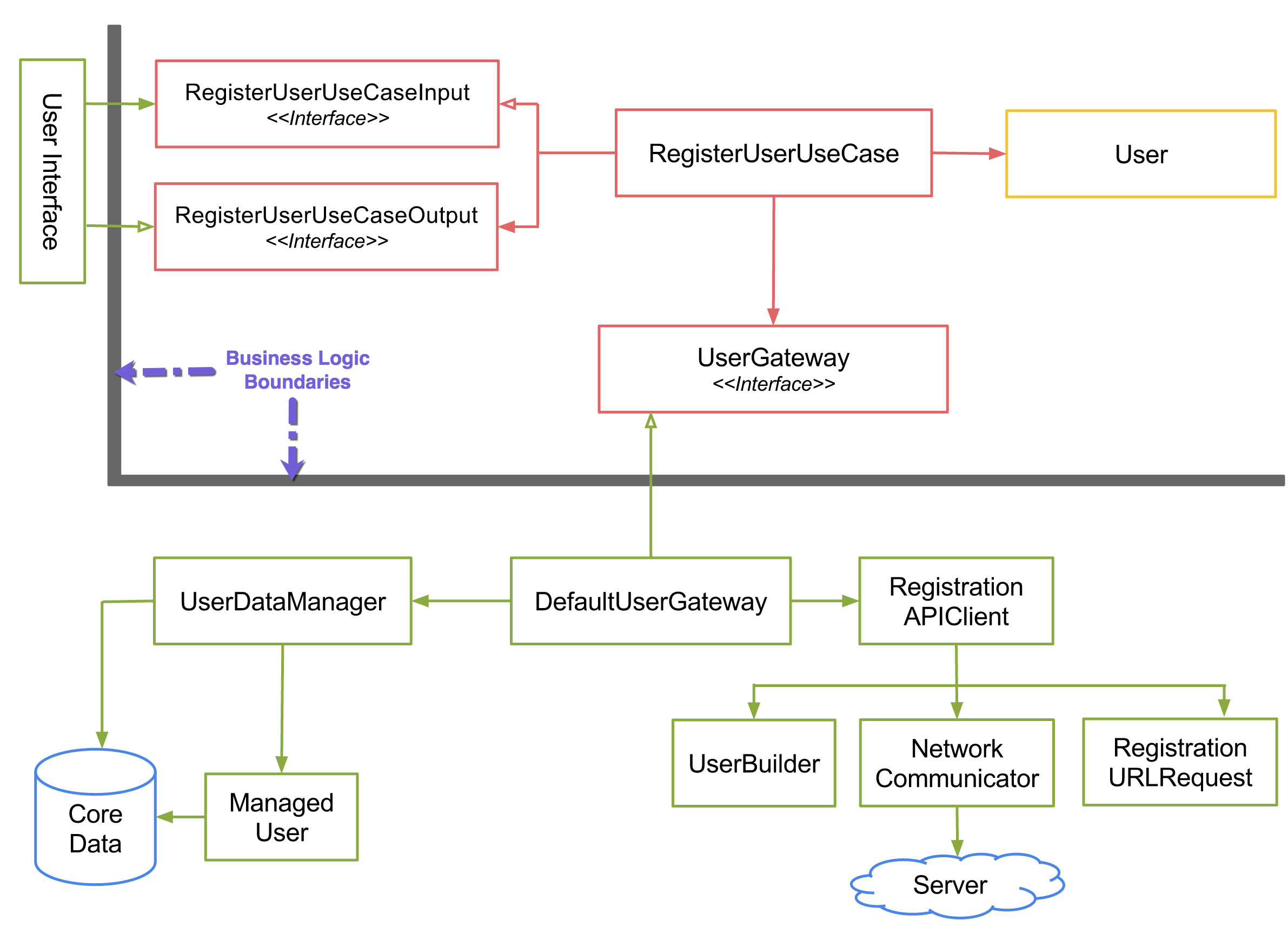

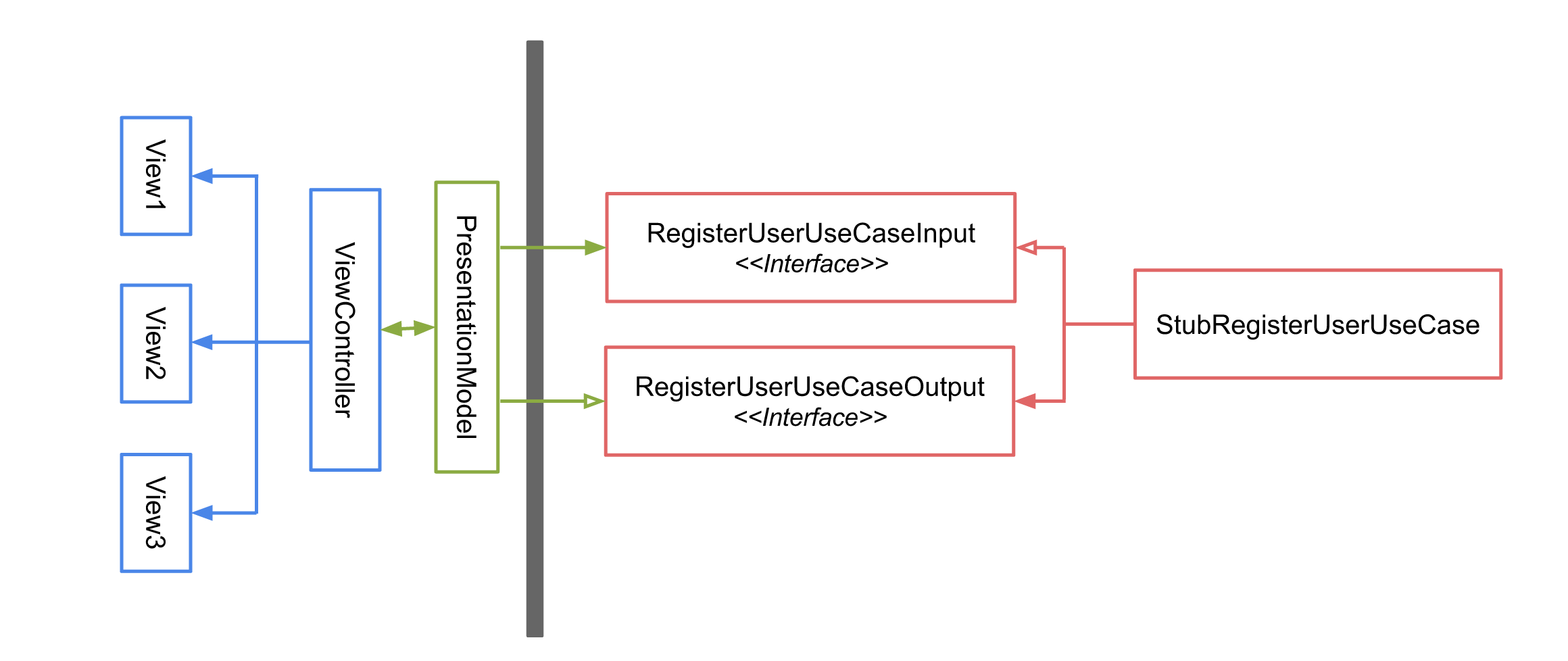

To make things a bit more concrete, let’s examine a use case from a fictitious registration subsystem. This subsystem is responsible for, among other things, creating an account from the information entered by the user. The figure below shows the various components involved in this use case.

The overall structure presented in the figure below is inspired by the Clean Architecture. Other architectural patterns can also help us separate concerns in an application, for example Hexagonal Architecture, DCI Architecture, Functional Core/Imperative Shell, and Domain-Driven Design.

“A use case defines a specific behavior of a system from the point of view of a user who has told the system to do something in particular.” - Agile Software Development, Principles, Patterns, and Practices

Use cases often need to work with external services, databases, and the operating system to accomplish a task. However, we don’t want the use case to be affected by changes to such externalities. We can use gateways to hide the outside detail. The gateways convert data retrieved from external sources into the format most convenient for the use case. A broader implication of using these gateways is that the high-level application logic doesn’t need to depend on low-level modules. Instead, the low-level modules depend on an abstraction defined by the high-level application logic.

More information about this approach of inverting dependencies can be found in a seminal paper written by Uncle Bob Martin.
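
The gateway idea can be sketched in a few lines. The example below is a minimal illustration, written in Python only to keep it short; the same shape applies in Objective-C or Swift. Apart from `RegisterUserUseCase`, every name here (`UserGateway`, `InMemoryUserGateway`, the `User` fields, the method names) is an assumption made for the sketch and not taken from the diagram.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """Entity in the shape the use case finds most convenient (fields assumed)."""
    email: str
    display_name: str


class UserGateway(ABC):
    """Abstraction defined by the business logic; low-level modules implement it."""

    @abstractmethod
    def create_user(self, email, password):
        """Persist a new account and return it as a User entity."""


class RegisterUserUseCase:
    """High-level logic: depends only on the UserGateway abstraction."""

    def __init__(self, gateway):
        self._gateway = gateway

    def register(self, email, password):
        if "@" not in email:
            raise ValueError("invalid email address")
        return self._gateway.create_user(email.strip().lower(), password)


class InMemoryUserGateway(UserGateway):
    """Low-level detail: converts its own storage rows into User entities."""

    def __init__(self):
        self.rows = {}

    def create_user(self, email, password):
        # Store a raw row, then hand back the entity the use case expects.
        self.rows[email] = {"email": email, "name": email.split("@")[0]}
        row = self.rows[email]
        return User(email=row["email"], display_name=row["name"])
```

Because the use case only sees `UserGateway`, swapping the in-memory implementation for one backed by a database or a remote service would not require any change to `RegisterUserUseCase`.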

Unit Tests #

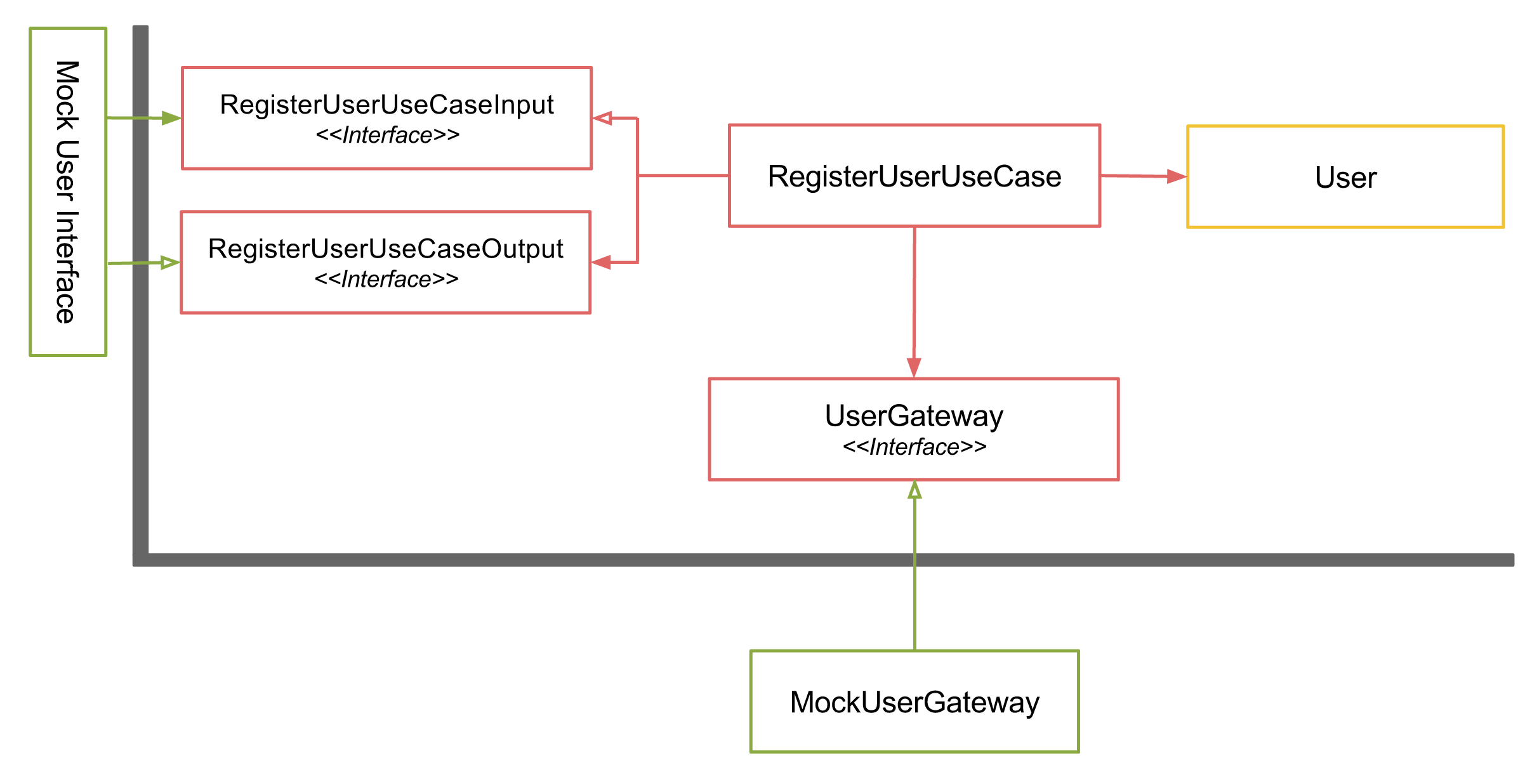

Unit tests are low-level tests that verify the behavior of a small part of the system. They are expected to be deterministic: a test should produce the same result on every run and fail only when the behavior of the code under test changes. The most common way to make unit tests reliable is to stub external dependencies such as databases and network requests. Stubbing these dependencies also makes unit tests run significantly faster than other kinds of tests.

To make the tests for RegisterUserUseCase class deterministic, we need to provide a mock user gateway that acts like a real gateway but doesn’t interact with a real database or an external service. We can then verify that the mock gateway received expected messages to make sure that the use case is behaving correctly. Since we have defined clear boundaries through which the user interface or any other delivery mechanism will interact with the use case, we can also mock those boundaries (e.g., RegisterUserUseCaseInput and RegisterUserUseCaseOutput) to verify that the use case returns an expected value.
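
A rough Python sketch of such a test follows, using the standard library's `unittest.mock`. The method names (`create_user`, `registration_succeeded`, `registration_failed`) and the password rule are hypothetical; they only stand in for whatever the real gateway and `RegisterUserUseCaseOutput` boundary expose.

```python
from unittest.mock import Mock


class RegisterUserUseCase:
    """Hypothetical use case; the real class may look different."""

    def __init__(self, gateway, output):
        self._gateway = gateway  # plays the role of the user gateway
        self._output = output    # plays the role of RegisterUserUseCaseOutput

    def register(self, email, password):
        if len(password) < 8:  # assumed business rule, for illustration only
            self._output.registration_failed("password too short")
            return
        user = self._gateway.create_user(email, password)
        self._output.registration_succeeded(user)


def test_registers_user_through_gateway():
    gateway, output = Mock(), Mock()
    gateway.create_user.return_value = {"email": "ada@example.com"}

    RegisterUserUseCase(gateway, output).register("ada@example.com", "longenough")

    # The mock gateway received the expected message...
    gateway.create_user.assert_called_once_with("ada@example.com", "longenough")
    # ...and the output boundary was handed the expected value.
    output.registration_succeeded.assert_called_once_with({"email": "ada@example.com"})
    output.registration_failed.assert_not_called()


def test_rejects_short_password_without_touching_gateway():
    gateway, output = Mock(), Mock()

    RegisterUserUseCase(gateway, output).register("ada@example.com", "short")

    gateway.create_user.assert_not_called()
    output.registration_failed.assert_called_once_with("password too short")
```

Neither test touches a database or the network, so both run in milliseconds and give the same result on every run.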

Unit tests can also be used to verify the behavior of components that lie outside the business logic boundaries. The UserBuilder class is designed to build a User entity from the JSON response sent by a remote registration service. It is a simple data-in-data-out class. Unit tests are perfect for verifying the behavior of these types of classes. We can test many scenarios, including subtle edge cases, very quickly. RegistrationURLRequest is another class outside the business logic boundary that can be fully tested using unit tests.
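
As an illustration, here is a small Python sketch of a data-in-data-out builder and the kind of edge cases a unit test can cover. The JSON field names (`id`, `email`) and the choice to return `None` for malformed input are assumptions; the post does not specify the response format.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: int
    email: str


class UserBuilder:
    """JSON text in, User (or None) out. Field names are assumed for this sketch."""

    def build(self, payload):
        try:
            data = json.loads(payload)
        except json.JSONDecodeError:
            return None  # response body was not JSON at all
        if not isinstance(data, dict):
            return None  # JSON, but not an object
        user_id, email = data.get("id"), data.get("email")
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(user_id, bool) or not isinstance(user_id, int):
            return None
        if not isinstance(email, str) or not email:
            return None
        return User(user_id=user_id, email=email)


def test_user_builder():
    builder = UserBuilder()
    good = '{"id": 7, "email": "ada@example.com"}'
    assert builder.build(good) == User(7, "ada@example.com")
    assert builder.build('{"id": 7}') is None                    # missing field
    assert builder.build('{"id": "7", "email": "a@b.c"}') is None  # wrong type
    assert builder.build('{"id": 7, "email": ""}') is None       # empty value
    assert builder.build("[]") is None                           # not an object
    assert builder.build("not json") is None                     # malformed body
```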

Integration Tests #

It is not sufficient to test only the business logic through unit tests. We also need to verify that the components that retrieve data from, or submit requests to, external resources work as expected. We can use integration tests for that purpose. They are also known as service-level tests. In general, integration tests identify problems that occur when units are combined. They also tend to identify configuration-related problems, for example a database that has not been set up correctly.

Before we begin to write integration tests, we need to identify various integration points in our application. It is not that difficult to identify them. For example, in the use case diagram listed above, RegistrationAPIClient and UserDataManager classes act as façades to a remote registration service and a local database respectively. Therefore, the entry point for our integration tests will be these two classes.

Testing Database Integration #

It is important to make sure that when a data manager class is asked to save something into the database, it does so without any error. The tests for UserDataManager class should hit the real database and verify that the data is saved correctly. Similarly, we need to verify that when we issue a query, the data manager returns correct data matching the query.
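
The sketch below shows the shape of such a test in Python, using a real SQLite file in a temporary directory. The table layout and the `save` and `find_by_name` methods are hypothetical; only the class name `UserDataManager` comes from the use case described earlier.

```python
import os
import sqlite3
import tempfile


class UserDataManager:
    """Hypothetical data manager backed by a real SQLite file."""

    def __init__(self, path):
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT NOT NULL)"
        )

    def save(self, email, name):
        with self._conn:  # commits on success, rolls back on error
            self._conn.execute(
                "INSERT INTO users (email, name) VALUES (?, ?)", (email, name)
            )

    def find_by_name(self, name):
        rows = self._conn.execute(
            "SELECT email FROM users WHERE name = ? ORDER BY email", (name,)
        )
        return [row[0] for row in rows]

    def close(self):
        self._conn.close()


def test_saved_users_can_be_queried():
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "users.db")
        manager = UserDataManager(path)
        manager.save("ada@example.com", "Ada")
        manager.save("ada@work.example", "Ada")
        manager.save("bob@example.com", "Bob")
        manager.close()

        # Reopen the file to check that the rows were really written to disk.
        reopened = UserDataManager(path)
        try:
            assert reopened.find_by_name("Ada") == ["ada@example.com", "ada@work.example"]
            assert reopened.find_by_name("Eve") == []
        finally:
            reopened.close()
```

Closing and reopening the database is a deliberate choice here: it guards against a test that passes only because the data is still sitting in the first connection.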

Testing External Services Integration #

Although we have already verified that UserBuilder and RegistrationURLRequest classes behave as expected through unit tests, we still need to make sure that RegistrationAPIClient collaborates with all three classes including NetworkCommunicator to submit a proper request to a remote service. To properly verify this collaboration, we shouldn’t stub any classes involved. This could mean letting the network request hit a real API server. If testing against the real server is not feasible, we can create a fake server using dyson that allows us to return a fake JSON response. We can even use something like Nocilla to stub HTTP requests altogether if we don’t want our tests to hit any kind of server directly.
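
To show the fake-server idea without depending on any particular tool, here is a Python sketch that uses only the standard library: a tiny local HTTP server returns a canned JSON response, and the client talks to it over a real socket. The `/register` path, the payload fields, and the 201 status are assumptions for the sketch, not the behavior of any real registration service or of dyson.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeRegistrationHandler(BaseHTTPRequestHandler):
    """Fake endpoint: echoes the submitted email back with a canned id."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        submitted = json.loads(self.rfile.read(length))
        body = json.dumps({"id": 42, "email": submitted["email"]}).encode("utf-8")
        self.send_response(201)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


class RegistrationAPIClient:
    """Hypothetical client: builds the request, sends it, parses the reply."""

    def __init__(self, base_url):
        self._base_url = base_url
        # An empty ProxyHandler ignores proxy settings from the environment.
        self._opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def register(self, email, password):
        payload = json.dumps({"email": email, "password": password}).encode("utf-8")
        request = urllib.request.Request(
            self._base_url + "/register",
            data=payload,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with self._opener.open(request, timeout=5) as response:
            return response.status, json.loads(response.read())


def test_client_round_trip_against_fake_server():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), FakeRegistrationHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        client = RegistrationAPIClient("http://127.0.0.1:%d" % server.server_port)
        status, user = client.register("ada@example.com", "s3cret")
        assert status == 201
        assert user == {"id": 42, "email": "ada@example.com"}
    finally:
        server.shutdown()
        server.server_close()
```

Nothing inside the client is stubbed, so request building, the network call, and response parsing are all exercised together.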

Testing System Services Integration #

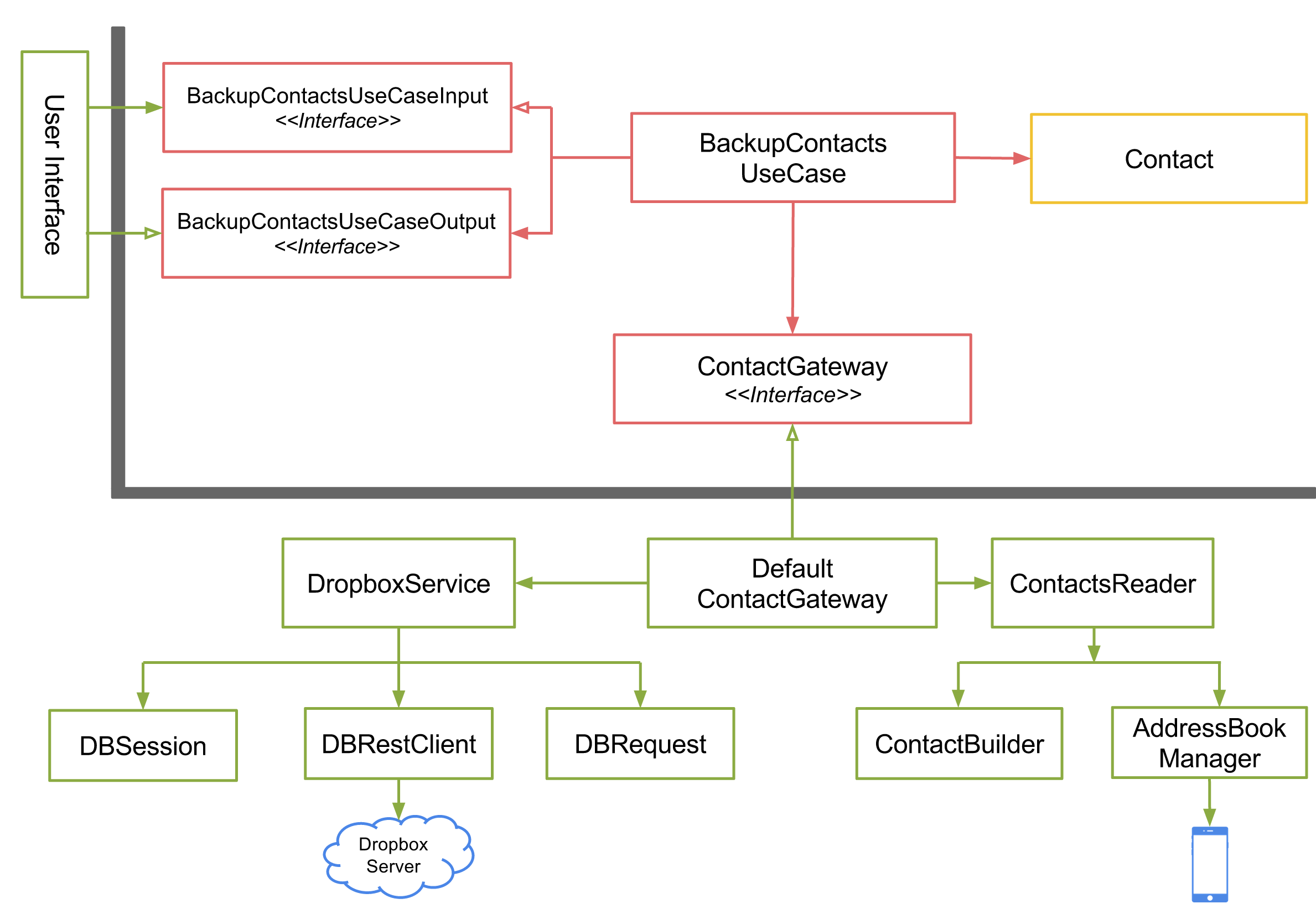

Let’s consider a different use case to understand how we might go about testing components that integrate with services provided by the operating system. This use case reads contacts from a device and uploads them to Dropbox. The figure below shows the various components involved in this use case.

One important thing to verify here is that ContactsReader class reads information from the address book correctly. One way of achieving that is by pre-populating the address book with dummy contacts and verifying that the Contact entities returned by ContactsReader contain correct data. The integration tests for ContactsReader should let AddressBookManager read information directly from either a device or a simulator.

An operating system provides a multitude of services that are very different in nature. Some of these services might be difficult to test. For example, on iOS how do we verify that a local notification issued by an app is indeed displayed to the user? iOS doesn’t allow third-party apps to inspect what is displayed on the notification tray. Perhaps we could make a compromise and settle for verifying that the notification has been scheduled by querying the UIApplication object.

Further, we can identify hard-to-test system services being used in our application and create a detailed manual test plan to verify their behavior. As long as we have good automated test coverage for the majority of our code base, we should be able to focus our manual testing effort on areas that are difficult to verify through automated tests.

One question that gets asked quite often is - shouldn’t we trust the services provided by the operating system and not worry about verifying their behavior? It is a valid question. There are two aspects to these types of integration tests:

1. Verifying that our application calls and responds to the system services in a correct way

2. Verifying that the system services themselves work as expected

If we are only after #1, we could verify that the objects provided by system frameworks received correct messages. We can use mocking frameworks such as OCMock to facilitate this type of verification. However, this technique might not work smoothly in languages that don’t provide a good mocking framework, for example Swift. An alternative is to use the Subclass and Override Method technique. If framework objects are injected through a public interface, we can override the methods that make a call to system frameworks and verify that the framework objects received correct messages.

More information about the Subclass and Override Method technique can be found in Working Effectively with Legacy Code.
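
A minimal Python sketch of Subclass and Override Method is shown below. `ReminderScheduler` and its `_post_to_system` method are invented for the illustration; in a real app the overridden method would be the one that talks to the system framework.

```python
class ReminderScheduler:
    """Hypothetical class whose production path would call a system framework."""

    def schedule(self, title, delay_seconds):
        if delay_seconds <= 0:
            raise ValueError("delay must be positive")
        self._post_to_system({"title": title.strip(), "delay": delay_seconds})

    def _post_to_system(self, notification):
        # The only method that touches the (imaginary) system service.
        raise NotImplementedError("would call the OS notification service here")


class TestableReminderScheduler(ReminderScheduler):
    """Test subclass: overrides the system call and records what was sent."""

    def __init__(self):
        self.posted = []

    def _post_to_system(self, notification):
        self.posted.append(notification)


def test_schedule_sends_trimmed_title_to_system():
    scheduler = TestableReminderScheduler()
    scheduler.schedule("  Stand-up  ", 60)
    assert scheduler.posted == [{"title": "Stand-up", "delay": 60}]


def test_schedule_rejects_non_positive_delay():
    scheduler = TestableReminderScheduler()
    try:
        scheduler.schedule("Stand-up", 0)
    except ValueError:
        assert scheduler.posted == []  # nothing reached the system call
    else:
        raise AssertionError("expected ValueError")
```

The technique works best when the code that touches the system service is confined to one small method, so the subclass overrides as little real behavior as possible.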

But if we need to also verify #2, then we need to perform a full integration test. This need tends to arise more often in mobile applications. The system services might return different values depending on what type of device the operating system is running on.

Presentation Tests #

To understand the role of presentation tests, let’s explore the presentation layer architecture for registration first. Generally, the user interface is built by piecing together various views and controls provided by a framework. A view controller is used to coordinate the communication between views and controls. Therefore, the view controllers tend to acquire most of the presentation logic. This makes testing hard because the tests have to manipulate the state of the views directly.

We can use presentation models to simplify UI testing. All presentation logic that represents the data and behavior of the UI window can be extracted out of view controllers into presentation models. A view controller then simply tells the views to project the state of a presentation model onto the screen. The presentation model also coordinates with use cases to read data produced as a result of executing the business logic. This makes the presentation model a data-in-data-out class. Unit tests are perfect for verifying the behavior of these types of classes.

One interesting thing to notice here is that since we have defined explicit boundaries around the use cases, we can easily provide a stub implementation that returns values most suitable for testing the presentation logic.
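
A Python sketch of this arrangement might look as follows. The presentation model's properties, the status messages, and the stub's `register` method are all hypothetical and chosen only to show the pattern.

```python
class StubRegisterUserUseCase:
    """Stub standing in for the real use case; returns whatever the test sets."""

    def __init__(self, result):
        self.result = result

    def register(self, email, password):
        return self.result


class RegistrationPresentationModel:
    """Hypothetical presentation model holding the state a view would display."""

    def __init__(self, use_case):
        self._use_case = use_case
        self.email = ""
        self.password = ""
        self.status_message = ""

    @property
    def submit_enabled(self):
        # Presentation logic: when should the submit button be tappable?
        return "@" in self.email and len(self.password) >= 8

    def submit(self):
        succeeded = self._use_case.register(self.email, self.password)
        self.status_message = "Welcome!" if succeeded else "Registration failed."


def test_submit_is_disabled_until_form_is_valid():
    model = RegistrationPresentationModel(StubRegisterUserUseCase(True))
    assert not model.submit_enabled
    model.email, model.password = "ada@example.com", "longenough"
    assert model.submit_enabled


def test_status_message_reflects_use_case_result():
    failing = RegistrationPresentationModel(StubRegisterUserUseCase(False))
    failing.submit()
    assert failing.status_message == "Registration failed."

    passing = RegistrationPresentationModel(StubRegisterUserUseCase(True))
    passing.submit()
    assert passing.status_message == "Welcome!"
```

No view or view controller is created in these tests; the view controller's only remaining job would be to copy `submit_enabled` and `status_message` onto the screen.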

By extracting the presentation logic into a separate class, we have essentially turned the view controllers into dumb objects that read data from presentation models and pass it on to the views and controls. Since the view controllers do so little, there is little that can go wrong. Most UI-related bugs should be caught by the tests for presentation models. Therefore, we don’t need to test the view controllers.

Sometimes a need might arise to verify that the final UI implementation looks exactly like what was conceived by the design team. In that case, we can write extensive tests using tools such as UIAutomation to verify that certain UI elements are present in the window.

End-to-End Tests #

Although we have tested each part in isolation, we still need to make sure that the application works as a whole. We can write end-to-end tests to achieve that. They are also known as functional or acceptance tests.

End-to-end tests treat the application as a black box. Therefore, we shouldn’t stub any external services, databases, or system services in these tests. End-to-end tests are generally initiated via the UI. On iOS, for example, these tests can be written in UIAutomation. But if it is difficult to test through the UI itself, we can use subcutaneous tests. These tests avoid difficulties with hard-to-test UI frameworks by operating just under the UI. They also tend to be much faster than testing through the UI. Since we have already extracted the presentation logic out of the view controllers into presentation models, writing subcutaneous tests shouldn’t be too difficult.
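
The following Python sketch illustrates what a subcutaneous test could look like: the test drives the presentation model, which is wired to a real use case and a real (here, in-memory SQLite) database, with nothing stubbed in between. All class internals, the table layout, and the status messages are assumptions for the sketch; a real end-to-end run would also involve the remote service.

```python
import sqlite3


class SQLiteUserGateway:
    """Hypothetical gateway writing to a real SQLite database."""

    def __init__(self, conn):
        self._conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY)")

    def create_user(self, email):
        try:
            with self._conn:  # commit on success, roll back on error
                self._conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
            return True
        except sqlite3.IntegrityError:
            return False  # email already registered


class RegisterUserUseCase:
    def __init__(self, gateway):
        self._gateway = gateway

    def register(self, email):
        return "@" in email and self._gateway.create_user(email.lower())


class RegistrationPresentationModel:
    def __init__(self, use_case):
        self._use_case = use_case
        self.email = ""
        self.status_message = ""

    def submit(self):
        ok = self._use_case.register(self.email)
        self.status_message = "Welcome!" if ok else "Registration failed."


def test_registration_just_below_the_ui():
    conn = sqlite3.connect(":memory:")
    model = RegistrationPresentationModel(RegisterUserUseCase(SQLiteUserGateway(conn)))

    # Drive the model the way a view controller would on a button tap.
    model.email = "Ada@Example.com"
    model.submit()
    assert model.status_message == "Welcome!"
    assert conn.execute("SELECT email FROM users").fetchall() == [("ada@example.com",)]

    # Submitting the same email again goes through the full stack and fails.
    model.submit()
    assert model.status_message == "Registration failed."
    conn.close()
```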

It is important to keep in mind that end-to-end tests take longer to run. Also, finding the root cause for a failing end-to-end test can take a long time. As a result, developers will have to wait much longer to find out if their implementation is correct.

End-to-end tests also tend to be quite brittle. A slight change in the UI could make them fail even though the overall behavior didn’t change. For these reasons, writing and maintaining these tests is costly. Therefore, we shouldn’t write too many of them.

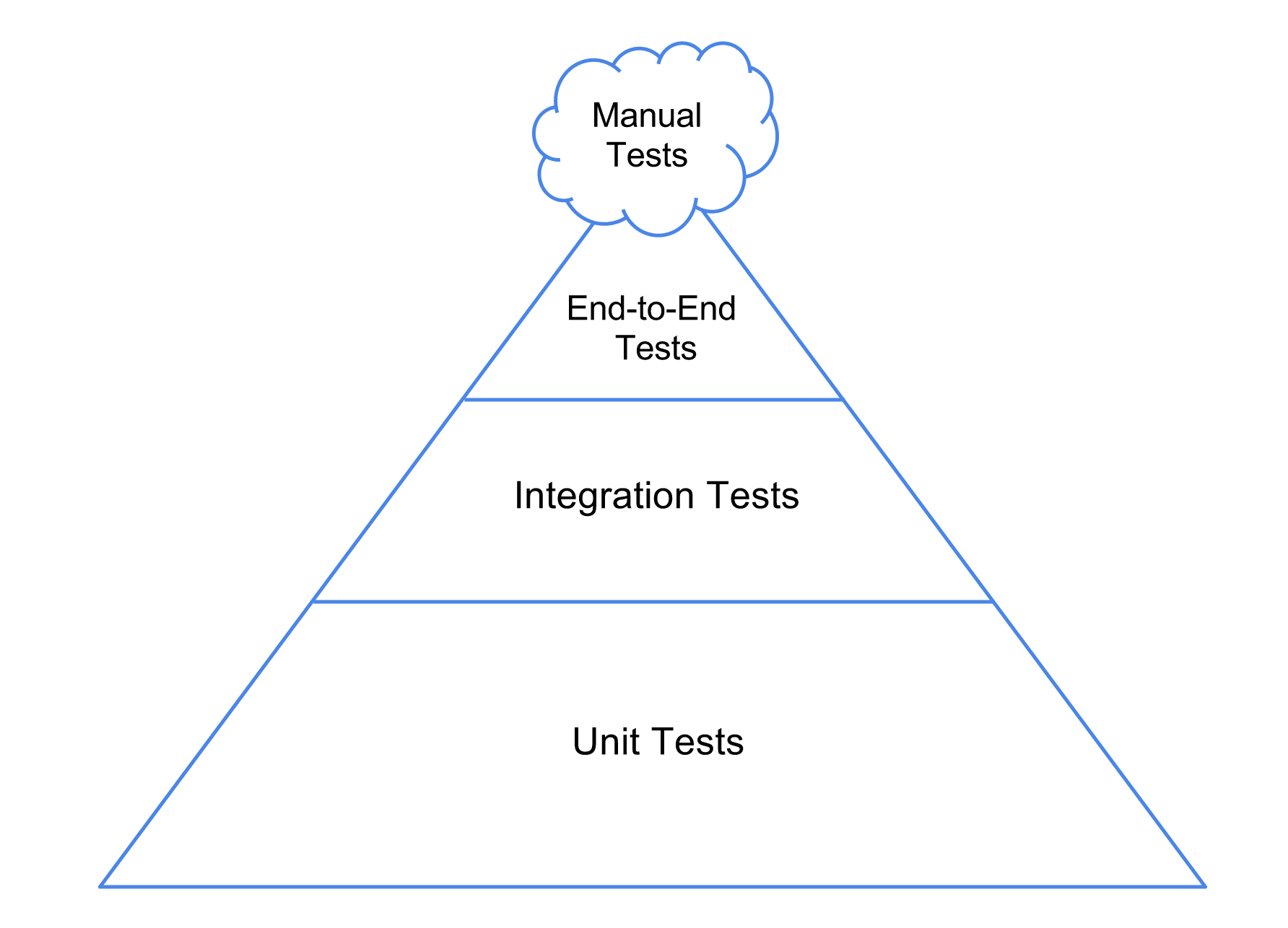

To build an efficient feedback loop, we need to verify the majority of our application’s behavior using unit tests. The figure below shows the well-known testing pyramid, which illustrates the desired proportion of each type of test.

Conclusion #

Devising an effective testing strategy for a complex application can be quite overwhelming. But if an application is architected with a good separation of concerns in mind, we can test different parts individually. The business logic can be fully tested using unit tests. Parts that interface with external services, the local database, and the operating system can be tested using unit and integration tests. The presentation logic behind the user interface can be tested using unit tests. Finally, to verify that all components work correctly as part of the overall system, we need to write some end-to-end tests.

Further Exploration #

- Test iOS Apps with UI Automation: Bug Hunting Made Easy

- Agile Testing: A Practical Guide for Testers and Agile Teams

- The Deep Synergy Between Testability and Good Design

- Architecture the Lost Years